Jose Manuel Flores

Sounds originate from diverse sources—music, noise, and language. In speech, accent quality integrates intensity and tonal quantity. Sound morphology encompasses the structural and organizational facets of sound, involving its configuration, development, and attributes within temporal or spatial contexts.

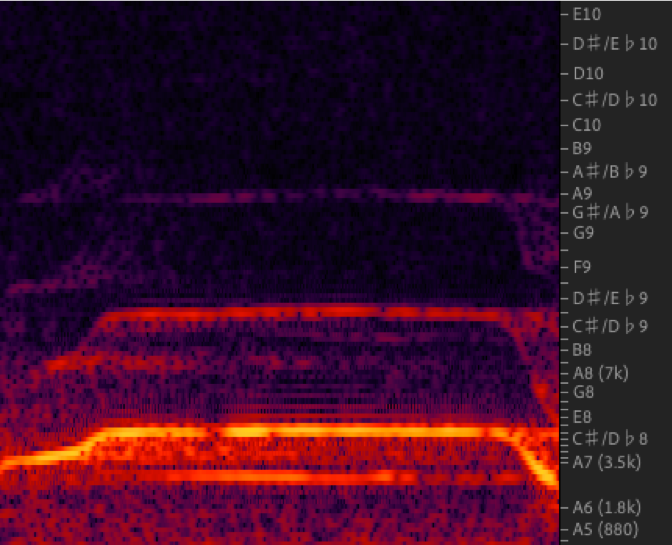

The syllable is a foundational unit of speech sound. It is composed of phonemes and involves two consecutive sound perceptions and the muscular actions that produce them. Phonic groups, in turn, are stretches of sound bounded by pauses, that is, by intervals of silence. Within sound studies, soundscape morphology focuses on modifications within groups of sounds that are arranged similarly in time or space. Although sound is invisible, technology can capture it: spectrograms and waveforms translate sound into visual depictions, allowing a detailed examination of its morphology. This analysis reveals essential physical attributes such as pitch, duration, intensity, and timbre.

First, pitch refers to the perceived frequency of a sound wave and determines whether a sound is heard as high or low. It denotes musical height, which depends on the frequency of vibration, and it encompasses intonation, the musical rise or fall of a word or phrase. Frequency is measured in hertz (Hz): higher-frequency waves are perceived as higher-pitched sounds, and lower-frequency waves as lower-pitched sounds.

Although pitch is closely related to frequency, the perception of pitch is subjective and can be influenced by individual hearing abilities, cultural factors, and personal experiences. In music, pitch is fundamental to melody and harmony. Different pitches create distinct musical notes, and arranging these notes in specific sequences forms melodies, chords, and harmonies. Pitch is organized into octaves, where doubling or halving the frequency corresponds to moving up or down an octave. This pattern allows for a wide range of pitches across the audible spectrum.
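The octave relationship can be illustrated with a short sketch. The function names and the 440 Hz reference pitch are assumptions chosen for illustration; only the doubling and halving rule comes from the text.

```python
import math


def shift_octaves(frequency_hz: float, octaves: int) -> float:
    """Frequency reached after moving up (positive) or down (negative) whole octaves."""
    return frequency_hz * 2.0 ** octaves


def octaves_between(f_low_hz: float, f_high_hz: float) -> float:
    """Number of octaves from f_low_hz up to f_high_hz (negative if going down)."""
    return math.log2(f_high_hz / f_low_hz)


a4 = 440.0  # assumed reference pitch, used only as an example
print(shift_octaves(a4, 1))           # 880.0 (one octave up doubles the frequency)
print(shift_octaves(a4, -1))          # 220.0 (one octave down halves it)
print(octaves_between(110.0, 880.0))  # 3.0
```

Because each octave multiplies the frequency by two, the distance between two pitches in octaves is a base-2 logarithm of their frequency ratio.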

Humans can typically perceive pitches only within a specific range, roughly 20 Hz to 20,000 Hz. Frequencies below this range (infrasound) and above it (ultrasound) are inaudible to us. Our perception of pitch is influenced by both physiological factors, like the mechanics of the ear, and psychological factors, such as our cognitive interpretation of sound stimuli. In language, pitch plays a crucial role in conveying meaning beyond words. Changes in pitch, known as intonation, can signal questions, emphasis, or emotional nuances in speech.

Duration, for its part, refers to the length of time a sound persists. It’s a fundamental characteristic that contributes to our perception and understanding of auditory information. Absolute duration is the specific length of a sound, measured in milliseconds, seconds, or minutes. Relative duration, by contrast, describes a sound’s length in relation to other sounds or intervals of silence within a sequence.

Duration significantly influences how we perceive and interpret sounds. Longer durations might suggest sustained or continuous sounds, while shorter durations might indicate abrupt or transient sounds. Duration can also convey emotions or meanings. For instance, a longer duration in a musical note might evoke a sense of calmness or serenity, while a shorter, abrupt sound might create tension or surprise. The importance of duration varies with context. In music, for instance, the duration of notes and rests (silences) contributes to rhythm, tempo, and overall musical structure. Humans perceive duration differently based on various factors, including cultural influences, individual auditory processing, and the context in which the sound is heard. Tools like spectrograms or waveforms assist in measuring and visualizing the duration of sounds, enabling detailed analysis and comparison. Understanding the duration of sounds contributes to various fields such as music, linguistics, psychology, and technology, shaping our perception and interaction with the auditory world.
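As a rough sketch of how duration might be measured from a digitized waveform, the example below derives absolute duration from the sample count, estimates the sounding portion with a simple amplitude threshold, and expresses one as a fraction of the other. The function names, the 1000 Hz sample rate, and the 0.05 threshold are illustrative assumptions, not a standard method.

```python
def absolute_duration(num_samples: int, sample_rate_hz: int) -> float:
    """Duration in seconds of a signal with the given number of samples."""
    return num_samples / sample_rate_hz


def active_duration(samples, sample_rate_hz: int, threshold: float = 0.05) -> float:
    """Seconds between the first and last sample whose magnitude exceeds threshold."""
    active = [i for i, s in enumerate(samples) if abs(s) > threshold]
    if not active:
        return 0.0  # nothing rises above the threshold: treat as silence
    return (active[-1] - active[0] + 1) / sample_rate_hz


def relative_duration(duration_s: float, reference_s: float) -> float:
    """Length of one sound expressed as a fraction of a reference length."""
    return duration_s / reference_s


sample_rate = 1000  # samples per second (assumed for the example)
# Synthetic signal: 0.2 s of silence, 0.5 s of sound, 0.3 s of silence.
signal = [0.0] * 200 + [0.5] * 500 + [0.0] * 300

total = absolute_duration(len(signal), sample_rate)
sounding = active_duration(signal, sample_rate)
print(total)                               # 1.0
print(sounding)                            # 0.5
print(relative_duration(sounding, total))  # 0.5
```

Real recordings contain noise, so a fixed threshold like this is only a starting point.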

Intensity in sound refers to the energy or power carried by sound waves. It reflects the force with which a sound is produced and the amplitude of vibration. Physically, intensity is the amount of energy transmitted per unit time through a unit area perpendicular to the direction of sound propagation, measured in watts per square meter (W/m²). It is usually expressed as a level in decibels (dB) and relates to the loudness or strength of a sound. Intensity also corresponds to our subjective perception of loudness: a higher-intensity sound is perceived as louder, while a lower-intensity sound is quieter. However, perception of loudness is not solely determined by intensity; factors like frequency and duration also play roles.

Intensity level follows a logarithmic scale. Each increase of 10 dB corresponds to a tenfold increase in physical intensity, so a large change in intensity produces a comparatively small change in perceived loudness. As a rule of thumb, a sound at 60 dB is perceived as roughly twice as loud as a sound at 50 dB. The threshold of hearing represents the lowest intensity level at which a sound can be detected by the human ear. It’s typically around 0 dB for average human hearing at certain frequencies. At the other end of the scale is the threshold of pain, which represents the intensity level beyond which sound becomes physically uncomfortable or even painful. This threshold varies among individuals.
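The logarithmic relationship between physical intensity and decibel level can be sketched as follows. The code uses the conventional reference intensity of 10⁻¹² W/m², which corresponds to 0 dB; the function names are illustrative. It covers only the physical scale, since perceived loudness is a separate, approximate matter.

```python
import math

I_REF = 1e-12  # W/m^2, conventional reference intensity (0 dB)


def intensity_level_db(intensity_w_m2: float) -> float:
    """Sound intensity level in dB relative to I_REF."""
    return 10.0 * math.log10(intensity_w_m2 / I_REF)


def intensity_ratio(level_difference_db: float) -> float:
    """Factor by which intensity changes for a given difference in dB."""
    return 10.0 ** (level_difference_db / 10.0)


print(intensity_level_db(I_REF))           # 0.0  (the reference itself)
print(round(intensity_level_db(1e-6), 6))  # 60.0
print(intensity_ratio(10.0))               # 10.0 (60 dB carries ten times the intensity of 50 dB)
```

A 10 dB step therefore multiplies the physical intensity by ten, even though listeners typically judge the louder sound as only about twice as loud.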

Intensity measurements are crucial in occupational safety to assess potential hearing damage caused by prolonged exposure to high-intensity sounds, as well as in engineering to design products with appropriate sound levels. Sound level meters are used to measure intensity levels in various environments. These devices provide real-time readings and spectral analysis to understand the distribution of sound energy across different frequencies.

Intensity diminishes as sound travels through a medium because its energy spreads out over a larger area. This is described by the inverse square law: for a source radiating freely in all directions, intensity is inversely proportional to the square of the distance from the source. Understanding intensity is vital in fields like acoustics, engineering, environmental science, and health, influencing how we assess, mitigate, and regulate sound levels for various purposes and contexts.
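A minimal sketch of the inverse square law follows, assuming an idealized point source that radiates equally in all directions with no reflections or absorption. Under that assumption the source power is spread over a sphere of area 4πr². The function names are illustrative.

```python
import math


def intensity_at_distance(power_w: float, distance_m: float) -> float:
    """Intensity (W/m^2) at a distance from an ideal point source of given power."""
    return power_w / (4.0 * math.pi * distance_m ** 2)


def level_drop_db(near_m: float, far_m: float) -> float:
    """Drop in intensity level (dB) when moving from near_m to far_m."""
    return 20.0 * math.log10(far_m / near_m)


near = intensity_at_distance(1.0, 1.0)
far = intensity_at_distance(1.0, 2.0)
print(round(far / near, 6))                # 0.25 (doubling the distance quarters the intensity)
print(round(level_drop_db(1.0, 2.0), 2))   # 6.02 (about 6 dB per doubling of distance)
```

In real rooms and outdoor environments, reflections and absorption make the measured drop deviate from this ideal figure.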

Timbre is often described as the color or quality of a sound. It encompasses the unique characteristics that differentiate two sounds, even if they share the same pitch and intensity.

Timbre refers to the complex quality of a sound that distinguishes it from pure tones. It’s what allows us to differentiate between various instruments or voices producing the same note at the same volume. Timbre arises from a sound’s harmonic content, which includes its overtones or harmonics. These additional frequencies beyond the fundamental pitch create the richness and complexity unique to each sound source.
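To make the idea of harmonic content concrete, the sketch below builds two tones with the same fundamental frequency but different harmonic amplitudes, then recovers those amplitudes with a single-frequency Fourier sum. All values (200 Hz fundamental, 8000 Hz sample rate, the amplitude lists, and the labels "mellow" and "bright") are assumptions made for illustration. Real instruments also differ in attack, decay, and noise components that this does not model.

```python
import cmath
import math

SAMPLE_RATE = 8000  # samples per second (assumed)
NUM_SAMPLES = 800   # 0.1 s, a whole number of cycles for every harmonic used
F0 = 200.0          # fundamental frequency in Hz (assumed)


def synthesize(harmonic_amplitudes):
    """Sum sine waves at F0, 2*F0, 3*F0, ... with the given amplitudes."""
    return [
        sum(
            amp * math.sin(2 * math.pi * F0 * (k + 1) * n / SAMPLE_RATE)
            for k, amp in enumerate(harmonic_amplitudes)
        )
        for n in range(NUM_SAMPLES)
    ]


def amplitude_at(samples, frequency_hz: float) -> float:
    """Estimate the amplitude of one frequency component via a Fourier sum."""
    acc = sum(
        s * cmath.exp(-2j * math.pi * frequency_hz * n / SAMPLE_RATE)
        for n, s in enumerate(samples)
    )
    return 2.0 * abs(acc) / len(samples)


def harmonic_profile(samples, count: int = 3):
    """Amplitudes of the first `count` harmonics, rounded for display."""
    return [round(amplitude_at(samples, F0 * k), 3) for k in range(1, count + 1)]


mellow = synthesize([1.0, 0.2, 0.05])  # weak overtones
bright = synthesize([1.0, 0.8, 0.6])   # strong overtones

print(harmonic_profile(mellow))  # [1.0, 0.2, 0.05]
print(harmonic_profile(bright))  # [1.0, 0.8, 0.6]
```

Both tones share the same pitch, since the fundamental is 200 Hz in each case, yet their harmonic profiles differ, which is the kind of difference listeners hear as timbre.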

Timbre perception is subjective and often difficult to describe. It’s influenced by factors such as the shape, material, and construction of the sound source, as well as playing technique or voice characteristics.

Different musical instruments produce distinct timbres due to variations in their construction, materials, and playing techniques. For example, a guitar and a piano playing the same note sound different due to their inherent timbral qualities.

Similarly, individual voices have their own timbral characteristics. This is why we can often distinguish between different singers even when they’re singing the same melody. Timbre can carry emotional connotations or associations. For instance, a warm and mellow timbre might evoke a sense of calmness or nostalgia, while a sharp and edgy timbre might induce tension or excitement. In electronic music, timbre is a crucial element in shaping unique and diverse sounds. Sound designers manipulate timbre using synthesizers and effects to create new and innovative auditory experiences. Analyzing timbre involves examining the spectrum of frequencies that compose a sound. Synthesizing timbre involves manipulating these frequency components to create new and diverse sounds. Understanding timbre helps musicians, audio engineers, and psychologists explore the richness and diversity of sound perception, leading to innovations in music, technology, and our understanding of auditory experiences.

Understanding the intricacies of sound qualities and sound morphology unveils a captivating realm of auditory exploration. Delving into the depths of pitch, duration, intensity, and timbre reveals not just the physical attributes of sound, but a tapestry of emotions, meanings, and expressions intricately woven into every auditory experience. Sound morphology, with its focus on the structural and organizational facets of sound, invites us to decipher the language of soundscapes—arrangements in temporal and spatial dimensions that transcend mere noise. As we decode the symphony of elements within sound, from syllables to phonic groups, we witness the convergence of science and art, unveiling the richness and complexity that lie beneath the surface of what we hear. It is a journey that not only enriches our comprehension of the world of sound but also deepens our connection to the profound essence of human expression and communication.